Synology

external backups pt. II

The worst archivist?

Of course, I know him.

It’s me.

So, some months ago I wrote about how to create and maintain an external backup [link] of the data stored on the NAS.

I also included some fancy commands, but when I wanted to do the scheduled refresh of the backup, I noticed that my ‘documentation’ was worthless.

The reason: I kept iterating on the setup and the commands, but never updated the documentation.

- Setup

* activate rsync access on the DS213 via the UI (otherwise ‘permission denied’ is reported, even though you can SSH in and check the rsync version, and then you wonder what fails ..)

* plug the external HDD into the Raspberry Pi 3B

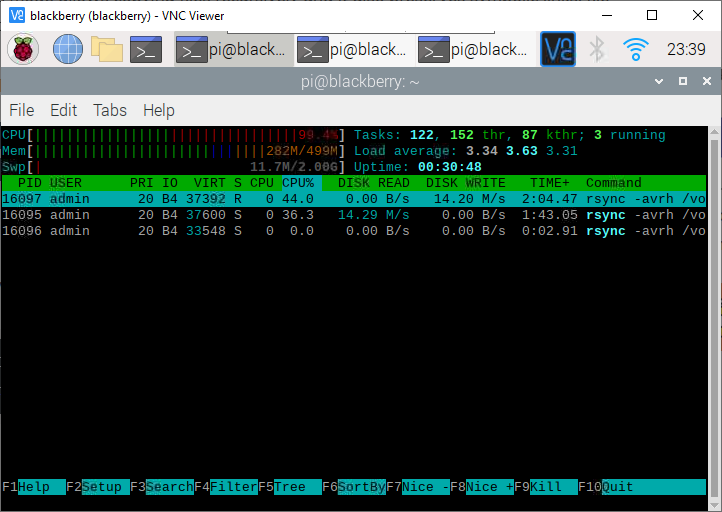

* run in a bash shell on the RPi (via VNC remote login):

rsync -avrh admin@ds213:/volume1/Photoshare_privat/ /media/pi/1.42.6-25556/Photoshare_privat/ && \
rsync -avrh admin@ds213:/volume1/homes/Marcel/ /media/pi/1.42.6-25556/homes/Marcel/ && \
rsync -avrh admin@ds213:/volume1/homes/ruzica/ /media/pi/1.42.6-25556/homes/ruzica/ && \
rsync -avrh admin@ds213:/volume1/homes/admin/ /media/pi/1.42.6-25556/homes/admin/ && \
rsync -avrh admin@ds213:/volume1/Camera/ /media/pi/1.42.6-25556/Camera/ && \
rsync -avrh admin@ds213:/volume1/photo/ /media/pi/1.42.6-25556/photo/ && \
rsync -avrh admin@ds213:/volume1/Musik/ /media/pi/1.42.6-25556/Musik/

to copy all relevant shared folders
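The long chain above is easy to get wrong when a share is added or renamed. A minimal sketch of the same idea as a loop could look like this. The names `SRC_HOST`, `DEST_ROOT`, `SHARES` and `DRY_RUN` are my own illustrative choices, not part of the original setup, and the script only prints the commands unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
# Sketch only: SRC_HOST, DEST_ROOT, SHARES and DRY_RUN are illustrative names.
SRC_HOST="${SRC_HOST:-admin@ds213}"
DEST_ROOT="${DEST_ROOT:-/media/pi/1.42.6-25556}"
# Dry run by default: print the commands instead of running them.
DRY_RUN="${DRY_RUN:-1}"

# Shares in order of priority, as in the chained command above (no spaces in names).
SHARES="Photoshare_privat homes/Marcel homes/ruzica homes/admin Camera photo Musik"

backup_all() {
  for share in $SHARES; do
    src="${SRC_HOST}:/volume1/${share}/"
    dest="${DEST_ROOT}/${share}/"
    if [ "$DRY_RUN" = "1" ]; then
      echo "rsync -avh $src $dest"
    else
      # -a already implies -r, so -avh behaves like the -avrh used above.
      # Stop at the first failure, like the && chain does.
      rsync -avh "$src" "$dest" || return 1
    fi
  done
}

backup_all
```

Run it once as is to check the printed commands, then with `DRY_RUN=0` to actually copy.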

‘Kein Backup, kein Mitleid.’

A common German saying meaning “no backup, no pity”. Five weeks ago, when another ransomware wave became public and I saw the disaster with the Western Digital network drives, I realized that despite using a NAS and regularly backing up data from different devices to this RAID 1 device, I have no real “hard” backup. My definition: a 1:1 mirror of the NAS content which is NOT connected to any network at all and is stored physically in a different room or even a different flat. Disconnected, because: are you sure everything is secured? No hidden bugs either? Physically distant, because: what if the NAS catches fire and the backup is affected too?

So I did a quick review of the current state: the NAS is a DS213 with the latest updates; it also offers a USB 3.0 interface and has two 4 TB drives inside, which are filled to 3.5 TB.

I decided to buy a 6 TB external 3.5″ hard drive (not an SSD, because of possible data loss when left powered down for years) for 122 €. Compared to the price of losing just a fraction of the photos: forget it!

Formatted it as ext4 on a Linux machine, then attached it to the Synology DS213. It was not detected, even though the DS213 claims to support ext4. So I let the DS213 format it again (don’t ask me ..).

Let’s say it outright: I don’t want to use their proprietary backup-solutions. I also don’t want any kind of encryption.

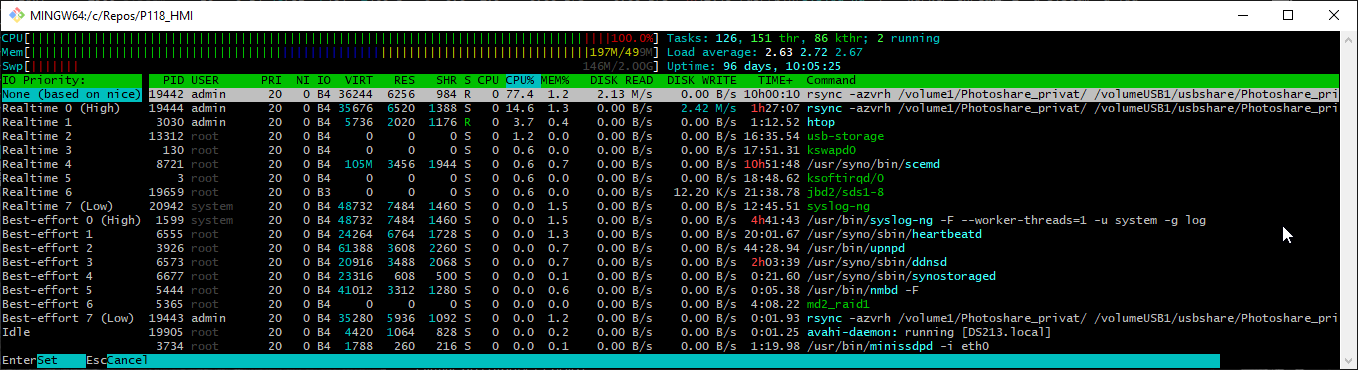

SSH’ed into the DS213 (SSH has to be activated first, because it is off by default). Then I checked which shared folders have to be copied, pieced together a chained rsync command (sorted by priority) and let it run. Detaching the session via ‘nohup’ wasn’t working.

rsync -avrh /volume1/Photoshare_privat/ /volumeUSB1/usbshare/Photoshare_privat/ && \
rsync -avrh /volume1/homes/Marcel/ /volumeUSB1/usbshare/homes/Marcel/ && \
rsync -avrh /volume1/homes/ruzica/ /volumeUSB1/usbshare/homes/ruzica/ && \
rsync -avrh /volume1/homes/admin/ /volumeUSB1/usbshare/homes/admin/ && \
rsync -avrh /volume1/Camera/ /volumeUSB1/usbshare/Camera/ && \
rsync -avrh /volume1/photo/ /volumeUSB1/usbshare/photo/ && \
rsync -avrh /volume1/Musik/ /volumeUSB1/usbshare/Musik/
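About the ‘nohup’ problem mentioned above: I never found out what exactly went wrong on the DS213, so take this as a guess. One common pitfall is that in `nohup cmd1 && cmd2` only `cmd1` is started by nohup; the rest of the chain still belongs to the login shell and never starts once the session is gone. A sketch that wraps the whole chain, with `run_detached` and the log path as made-up names:

```shell
#!/bin/sh
# Sketch only: run_detached and the log path are illustrative names.
run_detached() {
  # $1: log file, $2: the complete command chain as one string.
  # Wrapping the chain in `sh -c` puts every command of the chain under nohup;
  # with `nohup cmd1 && cmd2`, only cmd1 would be started by nohup.
  nohup sh -c "$2" > "$1" 2>&1 &
}

# Harmless demonstration with echo instead of rsync:
run_detached /tmp/backup-demo.log 'echo first && echo second'
wait
cat /tmp/backup-demo.log
```

For the real backup, the second argument would be the whole rsync chain in single quotes, and the `wait` is left out so you can log off.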

It turned out quite quickly that the single-core CPU of the DS213 is the bottleneck: it runs at 100 %, so write speeds of a mere 8-9 MB/s are achieved although the HDD is capable of writing up to 120 MB/s. My back-of-the-napkin estimate is 4-5 days for all the data. Subsequent backups should run faster, because they are incremental, and most of the data is written once and changed almost never.

Quick summary: I was baffled that, despite having some experience in IT, I did not have a _real_ backup before. And from discussions with peers I know that almost none of those working in IT have one either.

The proposed solution will save my family and me from a total loss.
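The napkin math from above, written down as a rough sketch. It assumes decimal units (1 TB = 1,000,000 MB) and a constant write speed; `estimate_days` is just an illustrative helper name.

```shell
#!/bin/sh
# Sketch only: estimate_days is an illustrative helper, decimal units assumed.
estimate_days() {
  # $1: amount of data in TB, $2: sustained write speed in MB/s
  awk -v tb="$1" -v mbs="$2" 'BEGIN { printf "%.1f\n", tb * 1000000 / mbs / 86400 }'
}

estimate_days 3.5 8   # prints 5.1
estimate_days 3.5 9   # prints 4.5
```

So 3.5 TB at 8-9 MB/s lands at roughly four and a half to five days, which matches the estimate.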

edit 20210801: ideas need some time to mature. Now one of the Raspberry Pi 3Bs (which hosts RocketChat anyway and is therefore online 24/7) takes care of the rsync calls to relieve the real PC, and rsync now runs without compression; the resulting write speed is in the range of 11-15 MiB/s.

Windows (10) doesn’t allow the same resource twice

So, the NAS* I have been using for years is divided into different shares: some for general access and one for music, which has a special user with read-only privileges.

Linux: no problem. Mount as many shares as you like with Gigolo and you are done.

Windows: use that 90s-style network mount in the ‘Explorer’ and add the share by resource name and credentials.

“\\ds213\musik” .. Works, but is awkward and not comfortable.

Then you want to mount the second share with different credentials and you get “Can’t mount the same share** with different credentials”.

Workaround:

Mount once by resource name and once by IP: “\\192.168.178.178\musik”

Another approach goes via the hosts file: give the NAS a second name there and mount by that name.
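A sketch of what the hosts-file variant could look like. The alias `ds213-musik` is made up for illustration; the IP is the one from the workaround above. As far as I can tell, Windows ties the credentials to the server name as typed, so a second name for the same box counts as a different server.

```text
# C:\Windows\System32\drivers\etc\hosts (edit as administrator)
# "ds213-musik" is a made-up alias for the NAS
192.168.178.178    ds213-musik
```

After that, the music share can be mounted as “\\ds213-musik\musik” with the read-only user, while the other shares keep using “\\ds213\...” with the normal credentials.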

* DS213 from Synology: 2 bay; now running 24/7 for 5 (?) years; upgraded in between from 2 TB drives to 4 TB with complete replication

** is actually “different shares at the same host”, but who am I with my limited knowledge?

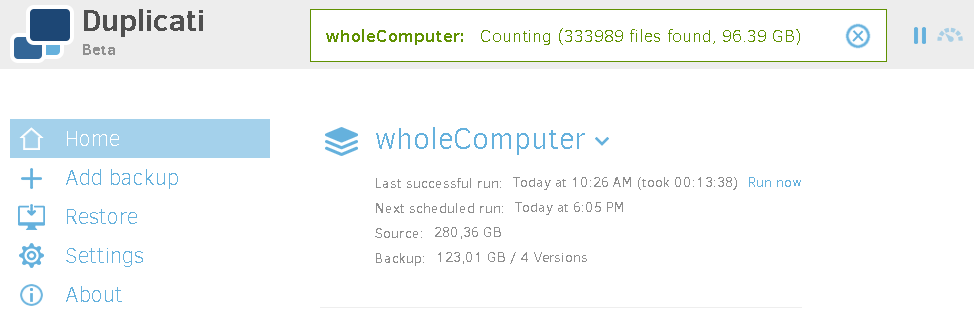

backup often, backup early: Duplicati

I wanted an open-source solution that can back up certain directories (or a whole PC) locally and remotely. Some months ago I found Duplicati and put it to good use at work, where it currently backs up the content of whole SSD partitions to an internal hard disk (not the most secure backup, I know, but given the constraints still better than no backup at all).

At home the content of the two home-directories (Linux) is transferred to a shared folder on the Synology-NAS.

duplicati on wikipedia // code under GNU LGPL

I can only repeat: working without a backup is the best path to failure.

In my history as a “computer technician” I have ruined several drives. Especially when you just want to do a simple, default operation (*cough* move a partition on a hard drive *cough*), everything fails and there is no way to revert to the original state. Never again 🙂